5 things you need to know about AI and Machine Learning

For many years we’ve been helping clients with the application of Artificial Intelligence and Machine Learning. While it can appear complex and intimidating, it’s not so bad once you understand a few key concepts.

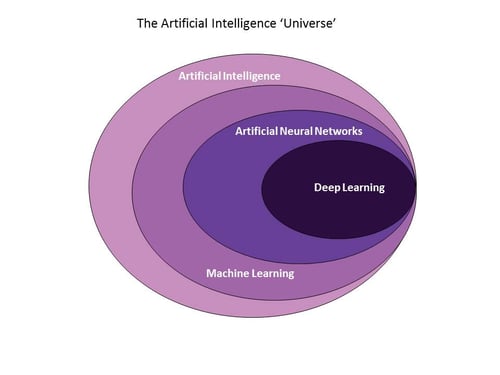

1. Getting the big picture — what is Artificial Intelligence?

Artificial Intelligence (AI) is a sector characterised by buzzwords and hype, so how do you cut through the noise? Think of AI as the superset, with everything else a subset of it. Put another way, AI is the universe, and things like Machine Learning, Neural Networks and Deep Learning are the solar systems within it. Broken down in more detail, AI and its main components stack up as shown in the image to the right.

- Artificial Intelligence is the theory and delivery of computer systems that perform functions we normally associate with human intelligence, such as vision, speech recognition, translation, and decision making. The type of AI we see in consumer and business applications today is typically a single-solution tool, created to solve a unique problem: for example, sat-nav route planning, recommendation engines, and voice response PAs like Apple’s Siri. Each performs a narrowly defined task very well. All were considered futuristic once, but are now a part of everyday life.

- Machine Learning. All you have to do is give the machine access to data and it will learn for itself, right? Not quite. Machine learning can be supervised, where a labelled training dataset is analysed first and what the model learns from it is then applied to new input data to make predictions. Or it can be unsupervised, where desired outcomes are not known in advance and input data is unlabelled. With unsupervised machine learning, the goal is not the production of a ‘right’ answer, but instead to learn more about the characteristics of the data itself.

- Artificial Neural Networks are modelled on the biological neural networks of animal brains. They learn by applying a range of algorithms (the set of rules followed in computer science and analytics for computer problem solving) to input data and comparing initial outputs to a desired result. The system is continually adjusted until the difference between the output and the desired result is at an acceptable level.

- Deep learning is a process that mimics the non-linear way human beings learn. A deep learning system has many interconnected processing layers — hence the word ‘deep’. Across each layer of the network, an algorithm analyses input data and produces an output. The next layer takes this output, performs analysis, and produces its own output — at each step refining and building on the learning from the previous layer. Iterations continue until the output reaches the required level of accuracy.
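To make the "adjust until the difference is acceptable" idea concrete, here is a minimal sketch of supervised learning with a single artificial neuron in plain Python. It is an illustration only, not how any production system is built: the tiny dataset (change in monthly spend versus whether a customer stayed), the names `EXAMPLES`, `train` and `predict`, and the learning rate and tolerance values are all hypothetical assumptions chosen for the example.

```python
import math

# Hypothetical labelled training data (an assumption for illustration):
# x = change in a customer's monthly spend (in hundreds of dollars),
# target = 1 if the customer stayed, 0 if they churned.
EXAMPLES = [(-2.5, 0), (-1.5, 0), (-0.5, 0), (0.5, 1), (1.5, 1), (2.5, 1)]


def sigmoid(z):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(x, weight, bias):
    """Output of a single neuron for input x."""
    return sigmoid(weight * x + bias)


def train(examples, learning_rate=0.5, tolerance=0.05, max_epochs=10_000):
    """Adjust the weight and bias until the mean squared error between
    the neuron's outputs and the desired results is <= tolerance
    (or until max_epochs is reached)."""
    weight, bias = 0.0, 0.0
    error = float("inf")
    for _ in range(max_epochs):
        error = 0.0
        grad_w = 0.0
        grad_b = 0.0
        for x, target in examples:
            output = predict(x, weight, bias)
            diff = output - target          # how far off the output is
            error += diff ** 2
            # Slope of the squared error with respect to the neuron's input
            # (constant factor of 2 folded into the learning rate).
            slope = diff * output * (1.0 - output)
            grad_w += slope * x
            grad_b += slope
        error /= len(examples)
        if error <= tolerance:
            break                           # difference is now acceptable
        # Nudge the parameters in the direction that reduces the error.
        weight -= learning_rate * grad_w / len(examples)
        bias -= learning_rate * grad_b / len(examples)
    return weight, bias, error


if __name__ == "__main__":
    weight, bias, error = train(EXAMPLES)
    print(f"weight={weight:.3f} bias={bias:.3f} error={error:.4f}")
    # Apply what was learned to new, unseen input data.
    print("stay probability for +2.0:", round(predict(2.0, weight, bias), 3))
```

A real neural network has many such neurons arranged in layers, and a deep learning system has many layers, but the loop is the same in spirit: produce an output, compare it to the desired result, adjust, repeat.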

Another common misconception has to do with the difference between AI and automation - click here to read another article that clarifies the difference.

2. If AI is a hammer – not every business problem is a nail

Business media is abuzz with all things AI, and for many decision makers there’s a growing fear that if they’re not implementing some sort of AI solution they’re missing out. There’s no need to panic. From a business perspective AI solutions are just tools like any other: sometimes they’re the right one for the job and other times they’re not, and the fundamentals still apply. For an AI tool to be useful, or a Machine Learning or Deep Learning project to be successful, the following are required:

- A problem or opportunity needs to be identified

- The right people with the right skills need to be involved in framing the issue and defining objectives

- The appropriate data needs to be sourced, prepared, and analysed to achieve the desired outcomes

3. It’s much, much faster than it used to be

What is new (and deserving of the hype) is that advances in technology keep increasing the speed at which AI solutions can be created, deployed, and start delivering value. When Datamine first started working in the AI space twenty years ago we could have spent days processing calculations in a computer model, something we can now do in milliseconds. Ultimately this means AI solutions are practical for a wider range of problems, at lower processing cost. But beware: speed isn’t better if you’re headed in the wrong direction. The key to successful deployment of AI is having people in your organisation who can set the business parameters.

The speed at which an application can be developed will be impacted by the toolset you use and how proprietary it is. Google’s TPU chip, for instance, has been designed specifically to work with Google’s Neural Network software TensorFlow (we’re fans), and Microsoft has also begun manufacturing its own chips for its virtual reality solutions. So whether internal, external, or a hybrid of both, choose an AI project team that chooses its tools wisely.

4. Know when to pull AI out of the bag

Some tasks are predisposed to an AI solution — recommendation engines, fraud detection, chat bots, and customer behaviour & prediction analysis — the list is wide ranging. What all of these use cases have in common is that they’re ‘messy’ problems. Their variables will change over time and new patterns will emerge. Fraud is a particularly good example, because you can count on criminals to come up with new and inventive ways to beat the system — an AI solution can detect these changing patterns long before a human investigator.

Another common use for AI (Deep Learning is very good at this) is ‘feature detection’, where a computer learns to identify features and can then recognise those features when it sees them again. That could be the spending patterns of a customer who is likely to churn, or recurrent styles of software code in computer viruses; the potential applications are essentially limitless. For instance, we’ve recently developed a Deep Learning application that can scan satellite imagery and accurately identify property features (e.g. swimming pools) on a grand scale, as in the photo of a Sydney suburb to the left. It’s a great example of the type of work that’s suitable for AI. Yes, somebody could do the same thing manually for a suburb or two, but what about an entire country? That’s big data, a place where AI and Deep Learning come into their own.

5. This ain’t DIY — experts will be required

If your business problem is right for AI, you’re going to need a team with a diverse skill set. Yes, AI projects are all about data, data science, and analytics, so your shortlist should include people with proven expertise in those fields, but don’t just leave them to it. You’ll also need people with the ability to set the business goals, translate ‘geek speak’ so that other stakeholders in the business can understand it, and most importantly, keep the team focused on how the information being generated can be applied to solving business problems.

AI solutions are proven and are being applied to an ever expanding universe of business problems. At the same time, things like Machine Learning and Deep Learning are still a long way from being off-the-shelf plug & play options — and getting expert advice from someone in the know is recommended.

Want to know more about applying AI in your business? Contact us today, or download the Datamine Guide to Predictive and AI Modelling below.
